You can download an example project from this GitHub repository.

Task interview...

My friend got an interview task to code a method that finds and returns the most frequent element in a large list of integers. If two elements are found, null is returned. That task took him sometime around 30-40 minutes.

I stopped for a moment, thought about my answer and after a couple of seconds, I told him that I would use LINQ with simple GroupBy() and Count() calls. That's easy and very readable, it may be not the most performant solution, but it took me a couple of minutes to implement not half an hour!

And then He told me that there were not only unit tests already implemented by that company, but also performance tests! So already I failed that interview ;) It inspired me to compare his dictionary-based approach and my LINQ-based approach.

LINQ approach

This approach is very easy to understand and read. Just group list elements, select the max count from selected groups and then check if there is more than 1 group matching maximal elements count.

public int? GetMostFrequentWithLinq()

{

int? result = null;

try

{

var groups = dataSet.GroupBy(x => x);

var maxCount = groups.Max(x => x.Count());

if(groups.Count(x => x.Count() == maxCount) > 1)

{

result = null;

}

else

{

result = groups

.FirstOrDefault(x => x.Count() == maxCount)?

.Key;

}

}

catch (Exception)

{

result = null;

}

return result;

}

Stackoverflow approach - Lookup collection

This solution was suggested by nawfal in this Stackoverflow question. I just changed this to match task description.

public int? GetFromStackoverflow()

{

int? result = null;

var dict = dataSet.ToLookup(x => x);

if (dict.Count == 0)

return null;

var maxCount = dict.Max(x => x.Count());

try

{

result = dict

.Where(x => x.Count() == maxCount)

.Select(x => x.Key)

.SingleOrDefault();

}

catch (Exception)

{

result = null;

}

return result;

}

Dictionary (friend's) approach

And here it is. Most optimal and working solution. To be honest I didn't saw this kind of code since the end of my career at Technical University. It's pretty low-leveled in opposition to LINQ. It's not that hard to understand after maybe 2-3 readings but beautiful and performant.

First of all, we check if there is anything in that dataset to check, if not we return null.

Then we are iterating on that dataset and if we have a new one we are adding a new element to our dictionary. Where the key is the actual list element, in our case int, and its value is the number of encounters. If the current value from iteration is present in our dictionary we are incrementing the dictionary's item value. If that element's value is bigger than the current biggest value we are changing mostCounted and biggestCount variables for new ones.

After iterating the whole dataset we are checking if there is more than one element in the dictionary that matches biggestCount if so we return null according to the task description.

public int? GetMostFrequentWithDictionary()

{

if (dataSet == null || dataSet.Count() == 0)

return null;

int? mostCounted = null;

int biggestCount = 0;

Dictionary<int, int> dict = new Dictionary<int, int>();

foreach (var value in dataSet)

{

if (!dict.TryGetValue(value, out int foundValue))

{

dict[value] = 1;

}

else

{

dict[value] = foundValue++;

}

if (dict[value] > biggestCount)

{

biggestCount = dict[value];

mostCounted = value;

}

}

if (dict.Any(x => x.Key != mostCounted && x.Value == biggestCount))

return null;

return mostCounted;

}

Tests

So after analyzing those 3 examples there is no magic in any of those solutions. But BenchmarkDotNet says differently.

I thought to myself that there is not going to be a huge difference. I couldn't make a bigger mistake :D

I ran benchmark tests for 10.000, 100.000 and 5.000.000 random generated integers without and with max values. Here are the results of the tests.

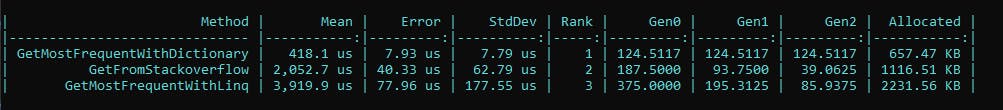

10.000 records, no max value on Random.Next()

Dictionary got: 418.1 us (us - microsecond), and 657.47 KB allocated memory.

Lookup got: 2,052.7 us and 1116.51 KB allocated memory.

Linq got: 3,919.9 us and 2231.56 KB allocated memory.

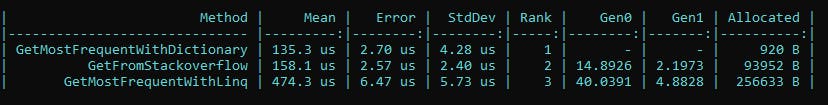

10.000 records, with the max value set to 10 (less data variety)

Dictionary got: 135.3 us (us - microsecond), and 920 B allocated memory.

Lookup got: 158.1 us and 93952 B allocated memory.

Linq got: 474.3 us and 256633 B allocated memory.

We already can observe that data variety is very important in the case of searching and grouping list elements. In this case with a smaller range of values for our dataset we can still use a much faster Dictionary. Using LINQ is nothing bad if you appreciate comfort.

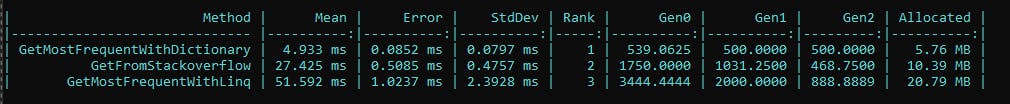

100.000 records, with no max

Dictionary got: 4.933 ms, and 5.76 MB allocated memory.

Lookup got: 27.425 ms and 10.39 MB allocated memory.

Linq got: 51.592 ms and 20.79 MB allocated memory.

This one starts to hurt me a lot ;(

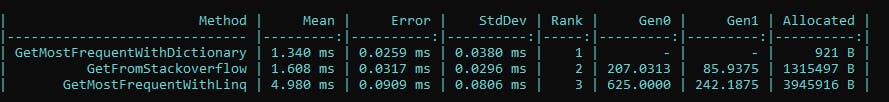

100.000 records, with the max set to 10

Dictionary got: 1.340 ms, and 921 B allocated memory.

Lookup got: 1.608 ms and 1315497 B allocated memory.

Linq got: 4.980 ms and 3945916 B allocated memory.

Less pain but let's see what happens at 5m records.

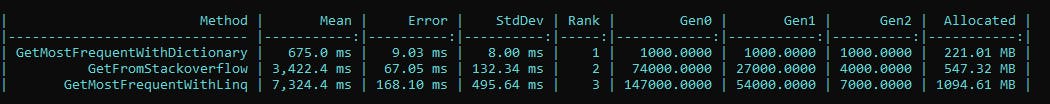

5.000.000 records, no max

Dictionary got: 675 ms, and 221.01 MB allocated memory.

Lookup got: 3,422.4 ms and 547.32 MB allocated memory.

Linq got: 7,324.4 ms and 1094.661 MB allocated memory.

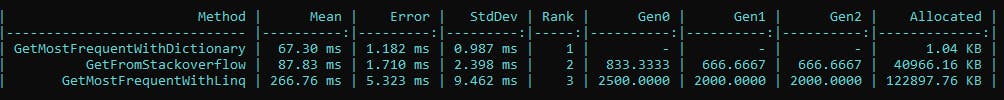

5.000.000 records, with max set to 10

Dictionary got: 67.3 ms, and 1.04 KB allocated memory.

Lookup got: 87.83 ms and 40966.16 KB allocated memory.

Linq got: 266.76 ms and 122897.76 KB allocated memory.

Conclusion

"... it may be not the most performant solution..."

Comparing smaller dataset runs with small data variety we can see the difference is pretty small. But in the 5 million records example, we see how much the self-made dictionary approach is superior to others. It's MUCH faster than the others and it allocates significantly less memory.

We can also observe how data variety in large data sets extends the execution time for each algorithm. For THE BEST approach which is a dictionary, we can see that it took 10x longer to end when the data set was not restricted to some range of values.

In conclusion, I have two thoughts.

It's time to recall old-school data structures and algorithms knowledge from university and stop to use LINQ everywhere where I can without thinking of performance, especially in a view of memory and execution time.

I would fail this interview miserably :)